We’ve all heard the promise of SASE (Secure Access Service Edge): it will simplify networks, strengthen security, and give organizations better control through a single, unified cloud service. In practice, though, many customers wait far longer than expected to see those benefits.

Surprisingly, the root of the problem is often the very first stage of deployment: connecting the customer’s edge infrastructure to the provider’s cloud service. This step sounds straightforward, but it is more complicated, costly, and time‑consuming than anyone cares to admit. And because of how SASE projects are usually scoped and budgeted, early delays can ripple through the rest of the rollout and throw the whole project off course.

Let’s break down why this first step has become such a bottleneck and how vendors and channel partners can avoid stalled projects and unmet expectations.

Phase One: Why Is It So Much Harder Than It Looks?

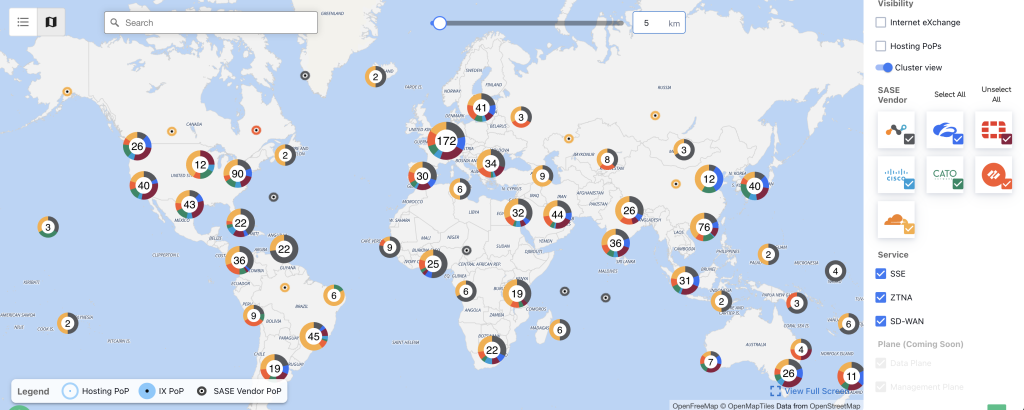

In most SASE projects, deployment is divided into stages. The first stage is typically about establishing secure connectivity: setting up tunnels from customer edge devices to the provider’s points of presence (PoPs); configuring routing, policies, and authentication; and testing performance.

While the design may look simple on a slide, the real‑world work is rarely straightforward. Teams have to integrate legacy firewalls, MPLS, or SD‑WAN configurations; resolve DNS and certificate issues; and plan for failover scenarios. These are often highly manual processes that require coordination between customer networking teams, the SASE provider’s engineers, and sometimes external partners.

As a result, the timeline for this first phase is often underestimated: what was supposed to take weeks can stretch into months.

The Risks of Getting It Wrong

Beyond project delays, mistakes or shortcuts in this first step can introduce serious operational and security risks. Misconfigured tunnels can cause tunnel flapping, where tunnels repeatedly drop and re-establish, leading to unpredictable connectivity and a degraded user experience. Poor routing policies or mismanaged certificates can cause service outages that disrupt critical business traffic.
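To make "flapping" concrete, here is a minimal sketch of how a monitoring script might flag it: count each tunnel's up/down state changes inside a sliding time window and report any tunnel that exceeds a threshold. The `TunnelEvent` record, the tunnel IDs, the 300-second window, and the limit of four transitions are all illustrative assumptions, not values taken from any SASE vendor's tooling.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

@dataclass(frozen=True)
class TunnelEvent:
    """Hypothetical monitoring record: a tunnel reported a state at a time."""
    tunnel_id: str
    timestamp: float  # seconds
    state: str        # "up" or "down"

def find_flapping(events, window_s=300.0, max_transitions=4):
    """Return IDs of tunnels that changed state more than max_transitions
    times within any window_s-second window (thresholds are assumptions)."""
    last_state = {}
    recent = defaultdict(deque)  # tunnel_id -> timestamps of recent transitions
    flapping = set()
    for ev in sorted(events, key=lambda e: e.timestamp):
        prev = last_state.get(ev.tunnel_id)
        last_state[ev.tunnel_id] = ev.state
        if prev is None or prev == ev.state:
            continue  # first report or unchanged state: not a transition
        times = recent[ev.tunnel_id]
        times.append(ev.timestamp)
        # Forget transitions that have slid out of the time window.
        while times and ev.timestamp - times[0] > window_s:
            times.popleft()
        if len(times) > max_transitions:
            flapping.add(ev.tunnel_id)
    return flapping

# One tunnel bounces every 20 seconds; another changes state once.
events = [
    TunnelEvent("branch-12:pop-a", t, "down" if i % 2 == 0 else "up")
    for i, t in enumerate(range(0, 120, 20))
]
events += [
    TunnelEvent("branch-07:pop-b", 0, "up"),
    TunnelEvent("branch-07:pop-b", 200, "down"),
]
print(find_flapping(events))  # {'branch-12:pop-a'}
```

A tunnel that changes state once is normal. Only repeated transitions in a short window indicate the instability described above, which is why the window and threshold are the key tuning knobs.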

Even seemingly small manual errors in tunnel setup or policy assignment can create security gaps, leaving some user traffic unprotected or bypassing inspection altogether. In large, distributed environments, the sheer volume of manual tunnel configurations increases the chance of inconsistency across sites. And when multiple vendors, PoPs, or migration phases are involved, these risks multiply. This puts uptime, security, and reputation at risk.
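One way to catch cross-site inconsistencies like these is to compare every site's tunnel configuration against a single baseline and report each field that differs. The sketch below assumes the configurations have already been exported into plain dictionaries; the field names (`ike_version`, `encryption`, `dpd_interval_s`, `inspect_traffic`) and the site names are hypothetical and not tied to any vendor's schema.

```python
# Hypothetical baseline that every site's tunnel config should match.
BASELINE = {
    "ike_version": 2,
    "encryption": "aes256-gcm",
    "dpd_interval_s": 10,
    "inspect_traffic": True,  # False would mean traffic bypasses inspection
}

def find_drift(site_configs, baseline=BASELINE):
    """Map each drifting site to {field: (expected, actual)}.
    A field missing from a site's config is reported with actual=None."""
    drift = {}
    for site, cfg in site_configs.items():
        diffs = {
            key: (expected, cfg.get(key))
            for key, expected in baseline.items()
            if cfg.get(key) != expected
        }
        if diffs:
            drift[site] = diffs
    return drift

sites = {
    "nyc-branch": {"ike_version": 2, "encryption": "aes256-gcm",
                   "dpd_interval_s": 10, "inspect_traffic": True},
    "lon-branch": {"ike_version": 1, "encryption": "aes256-gcm",
                   "dpd_interval_s": 10, "inspect_traffic": True},
    "sgp-branch": {"ike_version": 2, "encryption": "aes256-gcm",
                   "dpd_interval_s": 10, "inspect_traffic": False},
}

for site, diffs in sorted(find_drift(sites).items()):
    print(site, diffs)
```

The `sgp-branch` entry illustrates the security gap mentioned above: a single flipped flag leaves that site's traffic uninspected while the tunnel itself looks healthy.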

That’s the paradox: this phase is often underestimated, yet it’s where precision matters most. Doing it properly often demands more time and specialized expertise than even the most experienced teams predict.

The Magical Case of the Disappearing Professional Services Budget

One of the biggest consequences of these delays is financial. Many SASE vendors scope professional services (PS) work in phases, aligning budget and engineering hours to each stage. When the first phase drags on longer than expected, it quickly consumes the PS hours allocated for the entire rollout.

This leaves two choices:

- Pause the SASE rollout and request additional budget approval. This introduces further delays while the frustrated customer works to free up funds.

- Continue with the project as planned, only without sufficient engineering support. This approach risks security oversights and additional delays.

As you can imagine, neither path is appealing for customers or vendors. The result is that the project’s later stages, like rolling out ZTNA, cloud firewalling, or advanced data protection, can be postponed or only partially delivered. Customers end up paying significant implementation costs yet fail to see the promised SASE benefits.

Why customers feel frustrated

From the customer’s perspective, this scenario is especially painful. They sign an expensive contract for a platform that promises simpler operations, improved security, and unified policy management. But until all the pieces are in place, those benefits remain theoretical.

As TechTarget aptly points out: “SASE offers companies a compelling security strategy, but it takes time to ensure network teams have the visibility and management oversight they need.”

That visibility and control only come once traffic is actually routed through the SASE service. And as many customers are now discovering, that benefit is wholly dependent on the first step being fully deployed.

What makes this first phase so time‑consuming?

While different vendors use slightly different terms, this challenge shows up across the industry. Analysts agree that phased rollouts (where connectivity comes first, and security policies and data protection follow) are necessary to reduce risk, but they also introduce complexity.

- Orchestrating tunnels across diverse, multi-vendor edges drives up labor costs and delays service activation. Each customer often has a mix of branch appliances, CPEs, and cloud gateways, sometimes from different vendors or generations of hardware. Engineers must manually configure IPsec or GRE tunnels to multiple PoPs, test failover, and validate performance. Without automation, every new branch or vendor edge device means another manual tunnel configuration, making the rollout both slower and more expensive.

- Integrating legacy infrastructure is frequently required, adding friction to onboarding and service delivery. Most enterprises have existing firewalls, VPN concentrators, or MPLS circuits that can’t be removed overnight, so the SASE edge must be integrated carefully to avoid disrupting production traffic. That means additional design work, testing, and phased cutovers, each requiring specialized knowledge and often a configuration unique to that customer’s environment.

- Managing migrations between vendors, edges, or PoPs adds a lifecycle operational burden to delivery teams and channel partners. Beyond initial deployment, SASE services evolve. Customers might migrate traffic from one PoP to another for optimization, or replace hardware at the edge. Each migration introduces operational overhead: new tunnel setups, certificate updates, route changes, and live cutovers. Without orchestration tools, delivery teams end up handling these changes manually, increasing risk and further extending timelines.
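All three points come down to replacing per-device hand configuration with generation from a single source of truth. The sketch below assumes a hypothetical inventory that lists each site's edge vendor and its PoPs ordered by measured latency; it gives every site a primary tunnel to its nearest PoP and a backup to the next-nearest, and re-plans a PoP migration in one call. All names (`TunnelSpec`, `plan_tunnels`, `plan_migration`, the site and PoP IDs) are illustrative. Pushing the resulting specs to each vendor's device API is deliberately left out, since that part is vendor-specific.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class TunnelSpec:
    site: str
    vendor: str     # edge vendor, kept so a pusher could pick the right API
    pop: str
    role: str       # "primary" or "backup"
    protocol: str   # e.g. "ipsec" or "gre"
    priority: int   # lower value = preferred path

# Hypothetical inventory: site -> (edge vendor, PoPs ordered by measured latency).
INVENTORY = {
    "branch-berlin": ("vendor-a", ["pop-fra", "pop-ams", "pop-lon"]),
    "branch-austin": ("vendor-b", ["pop-dfw", "pop-iad"]),
}

def plan_tunnels(inventory, protocol="ipsec"):
    """Generate a primary and a backup tunnel per site from one inventory."""
    specs = []
    for site, (vendor, pops) in sorted(inventory.items()):
        if len(pops) < 2:
            raise ValueError(f"{site}: need at least two PoPs for failover")
        for priority, (role, pop) in enumerate(zip(("primary", "backup"), pops), start=1):
            specs.append(TunnelSpec(site, vendor, pop, role, protocol, priority))
    return specs

def plan_migration(specs, old_pop, new_pop):
    """Repoint every tunnel on old_pop to new_pop, keeping role and priority."""
    migrated = [replace(s, pop=new_pop) if s.pop == old_pop else s for s in specs]
    # Guard: a site must not end up with primary and backup on the same PoP.
    for site in {s.site for s in migrated}:
        pops = [s.pop for s in migrated if s.site == site]
        if len(pops) != len(set(pops)):
            raise ValueError(f"{site}: migration would remove PoP diversity")
    return migrated

specs = plan_tunnels(INVENTORY)
for spec in specs:
    print(spec.site, spec.role, spec.pop, spec.protocol)
moved = plan_migration(specs, old_pop="pop-fra", new_pop="pop-lon")
```

Adding a branch becomes one inventory entry rather than another hand-built tunnel, and the migration guard catches the kind of cutover mistake that would silently remove failover.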

The bottom line

The promise of SASE is real. But too often, the deceptively simple first phase of deployment becomes the biggest barrier to realizing that promise.

Delays in connecting the customer edge to the SASE provider’s infrastructure can cascade through the entire project, draining professional services budgets and leaving customers waiting months before they see meaningful benefits.

By understanding why this happens, vendors and channel partners can take proactive steps to plan, scope, and align early. Most importantly, they can identify the right technologies to automate this work and overcome these challenges, delivering SASE projects on time and on budget for optimal customer satisfaction. To learn more about SASE OpsLab’s marketplace for prepackaged deployment and migration solutions, visit sase-opslab.com.